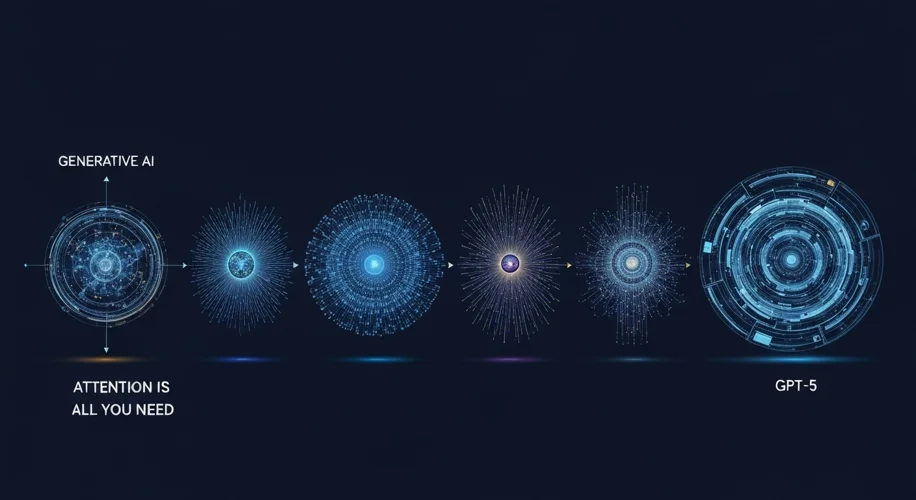

It feels like just yesterday that the artificial intelligence landscape was buzzing about a paper called “Attention Is All You Need.” Published in 2017, it introduced the Transformer architecture, a concept that fundamentally shifted how we approach natural language processing and, by extension, much of the AI we interact with today.

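Before the Transformer, many leading language models were recurrent neural networks (RNNs), such as LSTMs, which read text one word at a time and carried what they had seen forward in a compressed internal state. They often struggled with long-range dependencies in text, which essentially means remembering what was said much earlier in a conversation or document. Think about trying to understand a complex novel when you can only remember the last sentence you read. That was a bit like the challenge AI faced.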

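The paper's key move was to build the model entirely around the “Attention” mechanism and drop recurrence altogether. Attention allows a model to weigh the importance of every word in the input sequence relative to every other word, regardless of how far apart they are. This meant AI could “pay attention” to the most relevant parts of the data. And because the whole sequence is processed at once rather than word by word, training could be parallelized far more effectively. The result was a dramatic improvement in tasks like translation and text generation.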
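For readers who want to see the idea in code, here is a minimal sketch of the scaled dot-product attention at the heart of the paper, softmax(QKᵀ/√d_k)·V, written in plain Python. It is a simplification under stated assumptions: the queries, keys and values are taken to be the raw token vectors themselves, whereas a real Transformer first multiplies its inputs by learned projection matrices and runs several attention heads in parallel. The function names and toy vectors are illustrative, not taken from any library.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """Return (outputs, weights) for lists of query, key and value vectors.

    Simplified sketch: no learned projections, a single head, no masking.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score every key against this query, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        # Softmax turns the scores into weights that sum to 1.
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Toy self-attention over three hypothetical 2-dimensional "token" vectors.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one token attends to every token in the sequence, including ones at the far end. Nothing in the computation depends on distance, which is why a word at the start of a document can directly influence one at the end.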

This foundational paper truly unlocked a new era. We saw the rapid development of large language models (LLMs) like GPT-2 and GPT-3, which demonstrated remarkable capabilities in writing, coding, and answering questions. These models, built upon the Transformer architecture, started to feel less like tools and more like collaborators. I remember the early days of interacting with these systems; the progress was palpable, moving from somewhat clunky responses to impressively coherent and contextually aware outputs.

Now, we’re on the cusp of what’s next, with discussions around GPT-5 and beyond. The anticipation is high, and rightly so. We can expect these upcoming models to push the boundaries even further, offering enhanced understanding, more nuanced creativity, and perhaps entirely new ways of interacting with information.

From my perspective, having spent decades in the tech industry, this evolution is both exhilarating and a call for thoughtful development. As these AI systems become more powerful, the importance of considering their ethical implications grows. We need to ask ourselves: How do we ensure these tools are used responsibly? How do we mitigate potential biases that can creep into their training data and, consequently, their outputs? How do we prepare society for the changes these advanced AIs will inevitably bring?

It’s not just about building more capable AI; it’s about building AI that benefits humanity. The journey from that pivotal 2017 paper to the sophisticated models of today, and looking ahead to what GPT-5 might offer, is a testament to human ingenuity. However, with great power comes the responsibility to guide its development with wisdom and foresight. The conversation needs to be ongoing, involving not just the engineers but all of us, as we shape the future of this transformative technology.