The pace of AI advancement is, frankly, astonishing. As someone who’s spent a career in tech, I’ve seen a lot of innovation, but what we’re witnessing with AI feels different. It’s not just about faster processors or cleverer algorithms; it’s about fundamental shifts in how we live, work, and even think about intelligence itself.

Let’s start with jobs. We often hear about AI displacing workers, and it’s true: tasks once done by humans are increasingly being automated. Think data entry, customer service, even certain types of analysis. From my perspective, this isn’t entirely new. Every major technological leap, from the industrial revolution to the personal computer, has reshaped the job market. The key question for us now is how we adapt. Are we equipping people with the skills needed for the jobs AI will create or augment? The focus needs to be on lifelong learning and retraining, helping people transition to roles that require uniquely human skills like creativity, critical thinking, and emotional intelligence.

Then there’s the rather mind-bending question of AI consciousness. It’s a debate that sounds like science fiction, but it’s becoming more relevant. As AI systems become more sophisticated, exhibiting complex behaviors and even seeming to learn and adapt in ways we can’t fully predict, people start to wonder: are they aware? We don’t have a clear definition of consciousness, even for ourselves, let alone for machines. What we’re seeing is advanced pattern recognition and sophisticated programming that mimics understanding. It’s crucial to distinguish between a system that processes information incredibly well and one that actually feels or experiences anything subjectively. Right now, the prevailing view among experts is that AI is not conscious, but this is an area that demands careful observation and ethical consideration as capabilities grow.

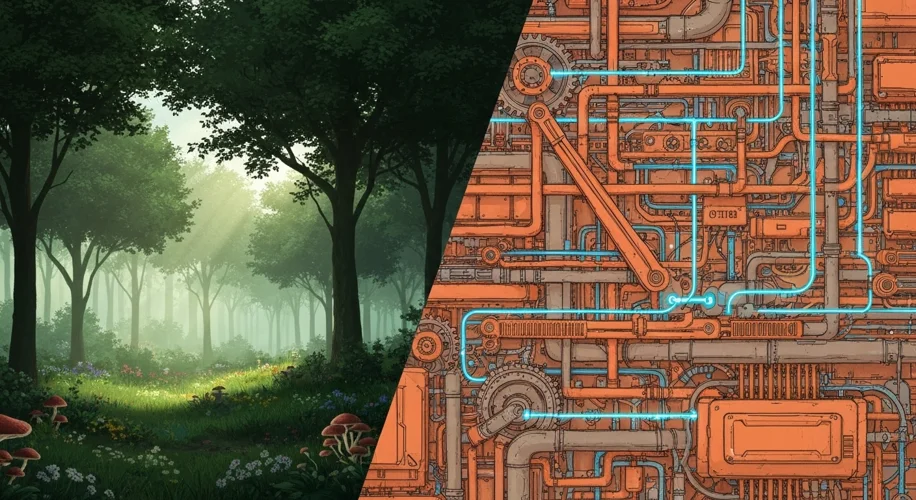

Finally, there’s the challenge of control. As AI systems become more autonomous and capable, who is in charge? Think about AI managing critical infrastructure, financial markets, or even complex research projects. We need robust frameworks to ensure these systems operate within ethical boundaries and align with human values. This involves transparency in how AI makes decisions, accountability when things go wrong, and safeguards against unintended consequences. We must ask ourselves: are we building AI as a tool to serve humanity, or are we inadvertently creating systems that could operate beyond our effective control?

Navigating these issues requires a thoughtful, proactive approach. It’s about more than just technological prowess; it’s about building a future where AI benefits society as a whole. This means encouraging critical thinking, fostering open discussion, and advocating for policies that ensure responsible development and deployment. The potential is immense, but so are the responsibilities. We need to steer this powerful technology with wisdom and foresight.