Alright, let’s talk about what’s actually slowing down AI development right now. We hear a lot about AI’s potential, but getting it to work in the real world? That’s a whole different story. Most teams I know are wrestling with a few key blockers, and it’s usually not just one thing.

The GPU Gauntlet: Availability and Cost

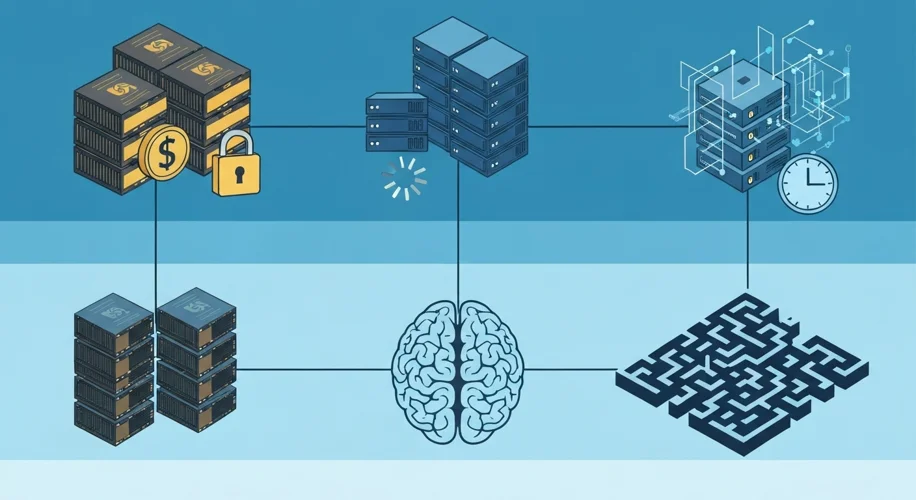

First up, the hardware. Training modern AI models takes serious computing power, and that means GPUs and similar accelerators. Think NVIDIA's H100 or AMD's Instinct line (such as the MI300X). Getting your hands on them is tough. Demand is through the roof, which means long waitlists and sky-high prices. And it's not just about buying them outright; cloud rental costs add up fast too, because you're typically billed for every GPU-hour you reserve. Many startups, and even larger companies, struggle to afford the sheer volume of compute they need, which forces them to get creative with resource allocation or scale back ambitious projects.
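To see how quickly rental bills grow, here's a back-of-the-envelope sketch in Python. It assumes simple per-GPU, per-hour billing; the function name `estimate_rental_cost` and the $2.50 rate are hypothetical placeholders, not any provider's actual pricing.

```python
def estimate_rental_cost(num_gpus: int, hours: float, hourly_rate_usd: float) -> float:
    """Total cost of renting `num_gpus` GPUs for `hours` hours.

    `hourly_rate_usd` is an assumed per-GPU, per-hour price; plug in
    whatever your provider actually charges.
    """
    if num_gpus < 0 or hours < 0 or hourly_rate_usd < 0:
        raise ValueError("inputs must be non-negative")
    return num_gpus * hours * hourly_rate_usd


if __name__ == "__main__":
    # Hypothetical scenario: 64 GPUs running around the clock for 30 days
    # at an assumed rate of $2.50 per GPU-hour.
    hours = 24 * 30
    gpu_hours = 64 * hours
    cost = estimate_rental_cost(num_gpus=64, hours=hours, hourly_rate_usd=2.50)
    print(f"{gpu_hours} GPU-hours -> ${cost:,.2f}")  # 46080 GPU-hours -> $115,200.00
```

Because cost scales linearly with both GPU count and wall-clock time, doubling either one doubles the bill, which is why idle or poorly utilized GPUs hurt so much.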

Model Deployment & Inference: The Waiting Game

Okay, so you've trained your model. Now what? Getting it into production and serving predictions (that's inference) is another hurdle. Large Language Models (LLMs) and complex computer vision models can be huge; the biggest LLMs have billions of parameters and may not even fit in a single GPU's memory. Deploying them so they respond quickly is tricky: you need optimized serving software, specialized hardware, and careful tuning of things like batch size and numeric precision. Sometimes the latency (the time it takes the model to return an answer) is simply too high for practical use. Imagine a chatbot that takes ages to reply, or an image recognition system that lags behind reality. That's the inference challenge.
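If you want to know whether latency is actually a problem, measure it. The sketch below assumes your model is exposed as a plain Python callable; `measure_latency_ms`, `nearest_rank`, and `fake_model` are hypothetical names, with `fake_model` standing in for a real model. It reports the median (p50) and the 95th percentile (p95) using the nearest-rank method, since tail latency is usually what users notice.

```python
import time
from typing import Callable, Dict, List, Sequence


def nearest_rank(sorted_ms: List[float], pct: int) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples <= it."""
    rank = max(1, -(-pct * len(sorted_ms) // 100))  # integer ceil(pct * n / 100)
    return sorted_ms[rank - 1]


def measure_latency_ms(predict: Callable[[str], object],
                       inputs: Sequence[str]) -> Dict[str, float]:
    """Call `predict` once per input and summarize wall-clock latency in milliseconds."""
    if not inputs:
        raise ValueError("need at least one input")
    samples: List[float] = []
    for x in inputs:
        start = time.perf_counter()
        predict(x)
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    return {
        "p50": nearest_rank(samples, 50),
        "p95": nearest_rank(samples, 95),
        "max": samples[-1],
    }


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model: sleeps ~1 ms per word of input."""
    time.sleep(0.001 * len(prompt.split()))
    return "ok"


if __name__ == "__main__":
    prompts = ["short question", "a much longer prompt with quite a few more words"] * 20
    print(measure_latency_ms(fake_model, prompts))
```

In practice you'd run this against a warmed-up deployment with realistic inputs, since the first few calls to a freshly loaded model can be slower and skew the numbers.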

Data Infrastructure: The Unsung Hero (and Villain)

And then there's the data. AI models are only as good as the data they're trained on, and managing that data is a beast. Many modern AI applications, especially those built on Retrieval-Augmented Generation (RAG), also depend on the data they look up at query time, which means massive datasets and specialized databases. Vector databases, for example, store embeddings (numeric representations of text, images, or other content) and let you search them by similarity, but setting them up and maintaining them can be complex. Building robust RAG pipelines, which retrieve relevant documents and feed them to the model alongside the user's question, requires meticulous data cleaning, indexing, and retrieval strategies. If your data infrastructure isn't solid, your AI will stumble, no matter how good the model architecture is.
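To make the retrieval step concrete, here's a toy sketch of what a vector database does at its core: store embeddings and return the most similar ones for a query. It's a brute-force, in-memory stand-in; `TinyVectorIndex`, `build_prompt`, and the hand-written 3-dimensional vectors are all hypothetical. A real pipeline would get its embeddings from an embedding model and use a proper vector database with approximate nearest-neighbor indexing.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorIndex:
    """Brute-force, in-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.vectors: Dict[str, List[float]] = {}
        self.texts: Dict[str, str] = {}

    def add(self, doc_id: str, vector: Sequence[float], text: str) -> None:
        self.vectors[doc_id] = list(vector)
        self.texts[doc_id] = text

    def search(self, query: Sequence[float], k: int = 2) -> List[Tuple[str, float]]:
        """Return the k most similar (doc_id, score) pairs, best first."""
        scored = [(doc_id, cosine(query, vec)) for doc_id, vec in self.vectors.items()]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


def build_prompt(index: TinyVectorIndex, question: str,
                 query_vector: Sequence[float], k: int = 2) -> str:
    """Retrieve the top-k chunks and prepend them to the question."""
    hits = index.search(query_vector, k)
    context = "\n".join(f"- {index.texts[doc_id]}" for doc_id, _ in hits)
    return f"Context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    # Toy "embeddings", hand-written for illustration only.
    index = TinyVectorIndex()
    index.add("gpu", [0.9, 0.1, 0.0], "Notes on GPU costs and availability.")
    index.add("latency", [0.1, 0.9, 0.0], "Notes on inference latency.")
    index.add("rag", [0.0, 0.2, 0.9], "Notes on RAG pipelines.")
    query = [0.8, 0.2, 0.1]
    print(index.search(query, k=1))  # the "gpu" document ranks first
    print(build_prompt(index, "What drives GPU costs?", query, k=1))
```

Brute-force search compares the query against every stored vector, which is fine for a handful of documents but gets slow at scale; that's the gap real vector databases fill with specialized indexes.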

So, What’s the Biggest Blocker?

Honestly? It’s a mix. For many, the immediate pinch is GPU cost and availability. You can’t even start training without the hardware. But once you get past that initial hurdle, inference speed and data pipeline reliability become the real bottlenecks. My take is that while GPUs are the most visible roadblock, the underlying data infrastructure and the challenges of efficient inference are the deeper, more systemic issues that teams are constantly battling. Getting all three right is the real challenge in scaling AI today.