Okay, so hear me out… When we talk about AI, the conversation is usually about AGI, job displacement, or the latest cool chatbot. But there's a massive underlying issue that's getting overlooked: AI's insatiable appetite for energy and resources.

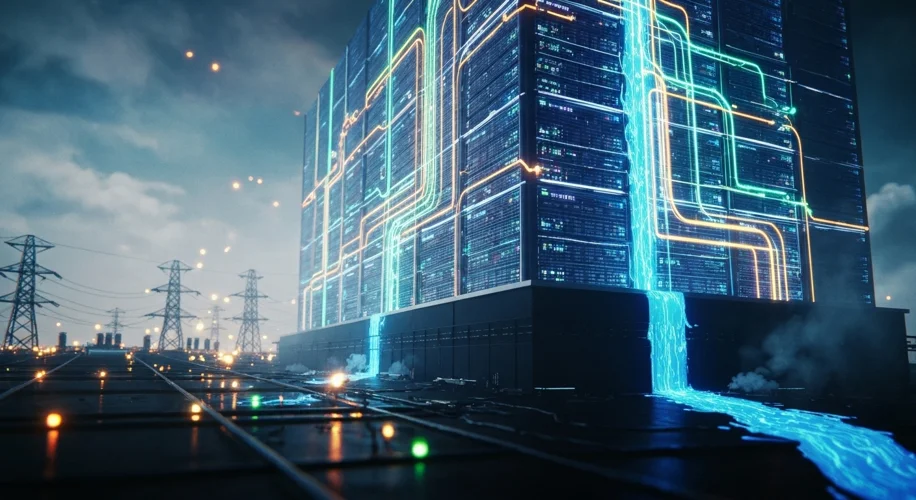

Think about it. Training those massive AI models, the ones that write code, generate images, or power your favorite virtual assistant, takes a ton of electricity. And it's not just training: every time you use an AI service, servers somewhere are humming along, drawing power. This isn't some future problem. It's happening right now, and it's putting serious strain on our existing power grids.

In my own PhD research on AI, I've seen firsthand how much computational power this work requires. It's immense. And as AI adoption explodes, so does that demand. We're talking about a potential crisis that could lead to widespread blackouts and shortages, possibly sometime between 2030 and 2040.

This energy demand doesn't just mean more electricity; it has cascading effects. Power plants, especially those that burn fossil fuels, ramp up production, which means more carbon emissions and faster climate change. Plus, data centers, the physical homes of AI, consume huge amounts of water for cooling. So AI's thirst isn't just for electricity; it's for water too.

Focusing solely on AGI or job displacement feels like a distraction from this immediate infrastructural and environmental challenge. We’re building this incredibly powerful technology, but are we building the infrastructure to support it sustainably? The data suggests we’re not keeping pace.

So, while it's exciting to think about what AI can do, we really need to start talking about the cost: not just the financial cost, but the environmental and infrastructural cost too. We need to think about how we'll power this future, and how we'll do it without overwhelming our planet and our grids. It's a critical conversation, and honestly, it's one we can't afford to ignore.