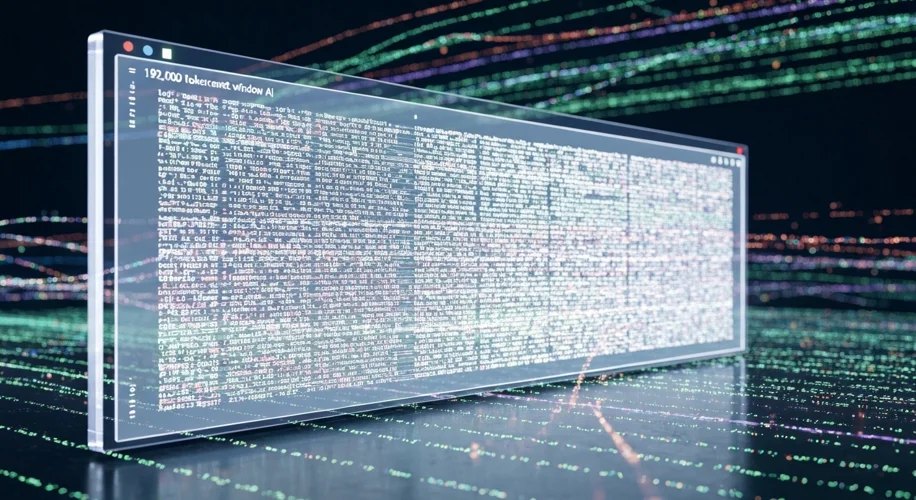

It feels like just yesterday we were marveling at AI’s ability to hold a decent conversation. Now, we’re seeing major leaps in how much information these tools can process at once. One significant development is the expanded context window in ChatGPT Plus, now capable of handling a massive 192,000 tokens.

What exactly does that mean for us, the users? Think of a context window as the AI's short-term memory. It is measured in tokens, the small chunks of text (often a whole word, sometimes part of one) that the model actually reads. The larger the window, the more information it can “remember” from your conversation or the documents you provide. Earlier windows were much smaller, so the AI would often “forget” the beginning of a long discussion or a lengthy document once it no longer fit.
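To make the “forgetting” concrete, here is a minimal, hypothetical sketch of one simple way a chat history can be trimmed to fit a fixed token budget: keep the newest messages and drop the oldest ones that no longer fit. It is not how ChatGPT manages its context. The function names, the budget, and the rough words-to-tokens estimate are all illustrative assumptions; real systems count tokens with the model's own tokenizer and may summarize or truncate differently.

```python
# Hypothetical sketch: keep only the most recent messages that fit a token budget.
# Token counts use a crude rule of thumb (~4 tokens per 3 English words), an
# assumption; real systems use the model's actual tokenizer.

def estimate_tokens(text: str) -> int:
    """Roughly estimate tokens as 4/3 of the word count, rounded up."""
    words = len(text.split())
    return -(-words * 4 // 3)  # ceiling division

def fit_to_window(messages: list[str], budget: int) -> list[str]:
    """Return the newest messages whose estimated tokens fit within `budget`.

    Older messages that do not fit are dropped; this is the 'forgetting'."""
    kept: list[str] = []
    used = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(msg)
        if used + cost > budget:
            break
        kept.append(msg)
        used += cost
    return list(reversed(kept))  # restore chronological order

history = ["my name is Ada", "I like chess", "what is my name?"]
print(fit_to_window(history, budget=10))  # the oldest message no longer fits
print(fit_to_window(history, budget=20))  # a larger window keeps everything
```

With the small budget, the message containing the name is dropped, so a model working from that trimmed history could not answer the final question. A bigger window simply pushes that cutoff much further back.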

With 192,000 tokens, ChatGPT Plus can now process and recall roughly 140,000 English words at once, the equivalent of a few hundred pages of text, going by the common rule of thumb that a token averages about three-quarters of a word. This isn’t just a minor upgrade; it opens up a whole new realm of possibilities for how we interact with AI.
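The arithmetic behind that estimate is simple enough to sketch. Both ratios below are assumptions rather than official figures: about 0.75 English words per token (a widely cited rule of thumb) and about 500 words per single-spaced page. Other languages, code, and different tokenizers will give different results.

```python
# Back-of-envelope conversion from tokens to words and pages.
# Both constants are assumed averages, not exact or official values.

WORDS_PER_TOKEN = 0.75   # assumed average for English prose
WORDS_PER_PAGE = 500     # assumed single-spaced page

def tokens_to_pages(tokens: int) -> tuple[int, int]:
    """Return (approximate words, approximate pages) for a token count."""
    words = round(tokens * WORDS_PER_TOKEN)
    pages = round(words / WORDS_PER_PAGE)
    return words, pages

words, pages = tokens_to_pages(192_000)
print(f"~{words:,} words, ~{pages} pages")  # ~144,000 words, ~288 pages
```

Running the same rule in reverse, a 90,000-word novel would need about 120,000 tokens, which is why a whole book can plausibly fit in a single 192K window.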

Imagine feeding an entire novel into the AI and asking it to summarize key themes, analyze character development, or even rewrite a chapter in a different style. Or consider complex technical manuals or legal documents: the AI can now take these in whole and answer intricate questions with far less risk of losing track of the details.

From my perspective, this enhanced capability has profound implications for productivity. Researchers can feed large datasets or research papers to the AI for analysis. Students can use it to understand dense academic texts. Even creative writers can leverage it to maintain continuity across long narratives or brainstorm complex plotlines.

However, as with any advancement in AI, it’s crucial to consider the broader implications. A larger context window means the AI can take in and work with far more of what we share with it in a single conversation. This raises important questions about data privacy and how this enhanced memory is managed. We must ensure that these powerful tools are developed and used responsibly, with clear guidelines on data handling and user consent.

This jump to 192K tokens is a clear indicator of the rapid progress in AI development. It’s moving beyond simple chat functions to becoming a more robust tool for deep analysis and complex task management. As users, understanding these technical leaps helps us appreciate both the potential benefits and the ethical considerations that come with increasingly sophisticated artificial intelligence. It’s an exciting time, but one that also calls for thoughtful engagement with the technology shaping our world.