Okay, so hear me out… we talk a lot about AI getting exponentially better, right? Like it’s always just around the corner from becoming a superintelligence or something. But what if I told you that, in a way, AI has already finished its exponential improvement phase?

I know, I know, it sounds wild. We’re seeing new AI models pop up constantly, each seeming smarter than the last. We’ve got AI that can write code, generate art, diagnose diseases, and even drive cars (sort of). It feels like we’re on a rocket ship to the future.

But let’s be real for a second. Think back to the early days of large language models (LLMs). The jump from, say, GPT-2 to GPT-3 was massive. Then GPT-3 to GPT-4 felt like another huge leap. We were seeing capabilities emerge that felt entirely new. It was like watching a baby learn to talk, then walk, then run, all in the span of a few years.

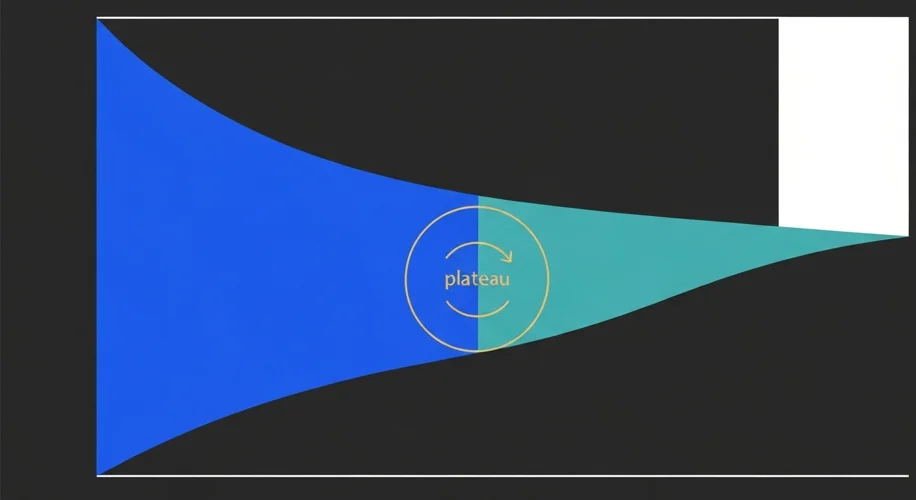

Now, though? The advancements still feel significant, don’t get me wrong. But are they exponential in the same way? Or are we seeing more of a linear, albeit very fast, improvement curve? We’re refining existing architectures, making them more efficient, and training them on even more data. It’s like a chef perfecting a recipe rather than discovering a completely new cuisine.

What do I mean by this? Well, exponential growth means capability multiplies by a roughly constant factor (doubling, say, or tripling) over each regular interval, so the gains themselves keep getting bigger. Linear growth just adds about the same amount each time. Early AI development, especially in deep learning, showed the explosive kind of progress. Suddenly, things that were impossible became trivial. Image recognition went from a research curiosity to something your phone does seamlessly.
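If the difference between the two curves feels abstract, here’s a tiny Python sketch. The numbers are completely made up (a "capability score" that starts at 1 and either doubles or gains a flat 5 points per interval), so treat it as a picture of the curve shapes, not a measurement of any real model.

```python
def exponential_growth(start, factor, steps):
    """Multiply by a constant factor every interval."""
    return [start * factor ** t for t in range(steps + 1)]


def linear_growth(start, increment, steps):
    """Add a constant amount every interval."""
    return [start + increment * t for t in range(steps + 1)]


# Hypothetical numbers, chosen only to show the shapes of the curves.
exp_curve = exponential_growth(start=1, factor=2, steps=6)
lin_curve = linear_growth(start=1, increment=5, steps=6)

for t, (e, l) in enumerate(zip(exp_curve, lin_curve)):
    print(f"interval {t}: exponential={e:>3}  linear={l:>3}")
```

With these toy numbers the linear curve actually leads for the first few intervals, then the doubling curve passes it at interval 5 and keeps pulling away. That’s the whole question in miniature: fast progress can be either shape, and you can’t always tell which one you’re on from a few data points.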

Today, while the progress is undeniable, the nature of that progress feels different. It’s more about incremental gains, better understanding of existing concepts, and overcoming practical limitations like computational cost and data bias. We’re hitting diminishing returns in some areas, where the effort and resources required to make a model just a little bit better are becoming enormous.
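Here’s one way to picture diminishing returns, as a rough sketch. It assumes a toy relationship where a model’s error shrinks as a power of the compute you throw at it (`error = compute ** -alpha`). Both that formula and the `alpha` values below are illustrative assumptions on my part, not numbers measured from any actual model.

```python
def compute_multiplier_to_halve_error(alpha):
    """Under the assumed toy model error = compute ** (-alpha),
    return how many times more compute it takes to cut error in half."""
    return 2 ** (1 / alpha)


# Hypothetical exponents: a smaller alpha means a flatter improvement curve.
multipliers = {
    alpha: compute_multiplier_to_halve_error(alpha)
    for alpha in (0.5, 0.2, 0.1)
}

for alpha, multiplier in multipliers.items():
    print(f"alpha={alpha}: about {multiplier:.0f}x more compute to halve the error")
```

In this toy setup, the flatter the curve gets, the more brutal the bill: halving the error goes from roughly 4x the compute to roughly 32x to roughly 1024x. Whether real systems behave like this is exactly the open question, but it shows how "a little bit better" can get very expensive very quickly.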

Consider the benchmarks. Many of the core tasks AI is good at today were already showing promise years ago. The difference is scale, polish, and accessibility. It’s like upgrading from a flip phone to a smartphone – it’s a massive quality-of-life improvement, but the fundamental concept of a personal communication device was already there.

This isn’t to say AI isn’t amazing or that progress will stop. Far from it! We’re still going to see incredible innovations. But maybe we need to adjust our expectations of the rate of improvement. The explosive, paradigm-shifting leaps might be behind us for now, replaced by a steady march of refinement and application.

It’s like reaching cruising speed on a highway. You’re moving fast, but you’re no longer accelerating. Maybe the “exponential phase” of AI’s foundational breakthroughs has already happened, and we’re now in the phase of applying and optimizing that incredible power. It’s an unpopular opinion, for sure, but one worth thinking about as we navigate this rapidly evolving landscape.