Alright, so let’s talk about something super important happening in the AI world: China is now enforcing some of the world’s strictest rules on labeling AI-generated content.

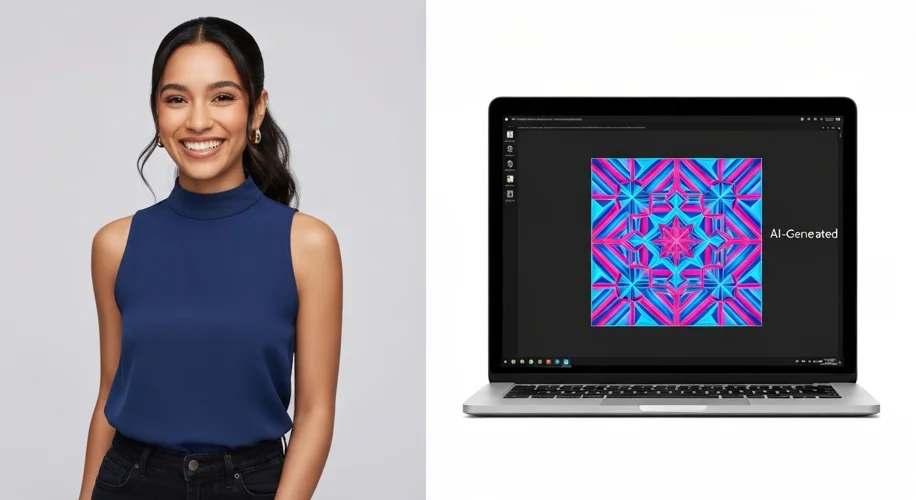

Starting September 1st, 2025, AI-generated text, images, video, and audio shared online in China, including deepfakes, must carry a clear label. Think of it as a visible tag, backed by a hidden marker embedded in the file’s metadata, that tells you, “Hey, this wasn’t made by a human.” The rules don’t stop at the AI tools that create the content; they also cover the platforms that distribute it, such as social media, messaging apps, and websites.

Why is this a big deal?

We’ve all seen those wild AI-generated images or videos that look incredibly real. While it’s cool tech, it also opens the door to some serious issues. Misinformation, fake news, and even malicious deepfakes can spread like wildfire if we can’t tell what’s real and what’s not. This new mandate in China is a pretty bold move to tackle that.

What does this mean for us?

For starters, it’s a step towards greater transparency. When you see a piece of content, you should be able to tell whether an AI was involved in creating it. This is crucial for building trust in the digital age. Imagine scrolling through your feed and not being sure whether that photo of a celebrity is real or whether that news report is actually a deepfake. That’s a genuinely unsettling prospect!

This kind of labeling isn’t just about saying “AI made this.” It’s about accountability. It helps users understand the source of information and encourages creators to be upfront about using AI tools. Plus, it makes it harder for bad actors to spread deceptive content without anyone knowing.

Should the rest of the world follow suit?

Honestly, I think we should seriously consider it. The genie is out of the bottle with AI-generated content, and it’s only getting more sophisticated. While it’s awesome for creativity, it’s also a massive challenge for truth and authenticity online.

Imagine if platforms globally adopted similar clear labeling standards. It could create a more honest and trustworthy online environment. We could all be more critical consumers of digital content, knowing when to apply a healthy dose of skepticism. It’s about empowering users with information, not just letting AI run wild.

This isn’t about stifling innovation; it’s about guiding it responsibly. As AI becomes more integrated into our lives, clear guidelines on how its output is presented are essential. It’s a conversation we need to have, and China’s strict new rules are definitely a major reference point.