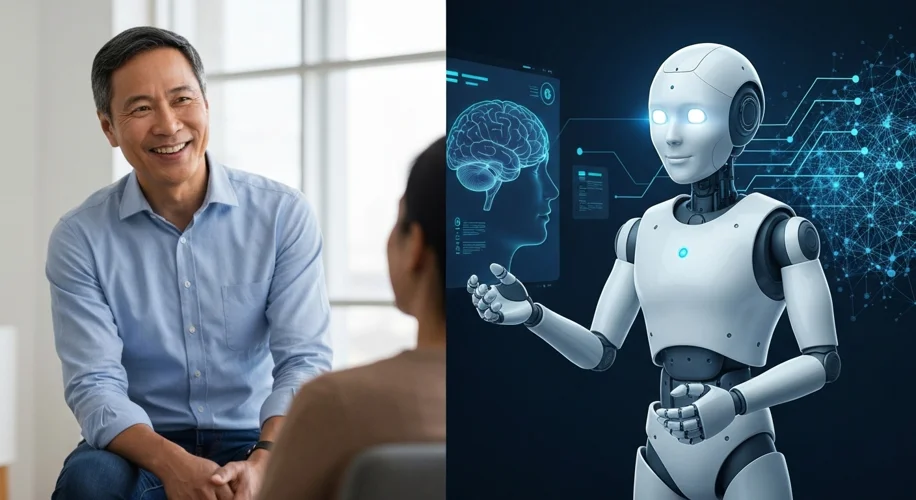

As technology continues to weave itself into every corner of our lives, it’s no surprise that artificial intelligence is stepping into the realm of mental health support. We’re seeing AI chatbots and apps promising to listen, offer advice, and even track our moods. It sounds like a big step forward, especially for those who might struggle to access traditional mental health services.

But as we lean more into these digital companions, some experts are raising a significant concern: are we sliding into an abyss by over-relying on AI for our mental well-being?

Did you know that roughly 1 in 5 U.S. adults experience mental illness each year? Access to care can be a huge hurdle for many. AI offers a potential bridge, providing support that’s available 24/7 and can be more affordable. For example, some AI tools can help with basic cognitive behavioral therapy techniques or simply offer a non-judgmental space to vent. This accessibility is crucial, especially for marginalized communities that often face systemic barriers to healthcare.

However, there’s a growing worry about the limitations and potential downsides. AI, as sophisticated as it is, doesn’t truly understand human emotion or context the way a trained therapist does. It operates on algorithms and data, not empathy. What happens when an AI misinterprets a user’s distress or offers advice that’s not quite right for their specific situation? The risks could be significant: a user’s condition could worsen, or they could come away with a false sense of security.

Another concern is equity. While AI could improve access, there’s also a risk that it could exacerbate existing disparities. Will these advanced tools be equally available and effective for everyone, regardless of their socioeconomic background, digital literacy, or cultural context? If not, we might create a two-tiered system where only some benefit from technological advancements in mental health.

It’s also important to remember that AI is still a tool. It can be incredibly useful for certain tasks, like providing information, tracking patterns, or offering a first layer of support. But it’s not a replacement for the nuanced, human connection that is often vital in therapeutic settings. Think of it like this: an AI can tell you about the weather, but it can’t hold your hand during a storm.

As we move forward, it’s essential that we approach AI in mental health with a critical yet open mind. We need robust research, clear ethical guidelines, and a focus on making these technologies serve everyone equitably. The goal should be to augment human care, not replace it, so that technology truly supports our well-being instead of leading us down an unintended path.

This is a complex challenge, but by staying informed and demanding thoughtful development, we can navigate this new frontier of mental health support more wisely.