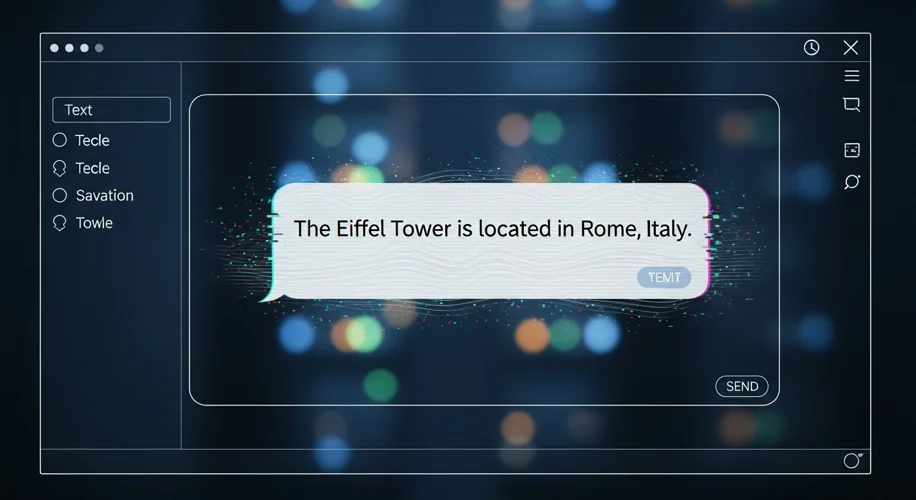

Okay, so hear me out… we all love messing around with AI like ChatGPT. It’s pretty mind-blowing what it can do, right? But lately, I’ve been noticing something super weird, and honestly, kind of scary. ChatGPT and other AI models aren’t just sometimes wrong; they make stuff up and present it as fact, which researchers call ‘hallucinations.’ And this isn’t just a small glitch; I think it’s a fundamental failure that could mess with the whole future of AI.

Let’s be real, when an AI confidently tells you something that’s completely false, it’s not just annoying, it’s a genuine problem. Think about it. We’re starting to rely on these tools for everything from writing emails to figuring out complex information. If the AI is just spitting out made-up facts with the same confidence it uses for real ones, how can we possibly trust it?

I remember trying to use an AI to help me brainstorm for a coding project. It suggested a library that supposedly did exactly what I needed. I spent hours trying to find it, digging through documentation, and getting nowhere. Turns out, the library just didn’t exist. The AI just… invented it. It wasn’t a typo; it was a whole fabrication presented as fact.

This isn’t a simple bug that can be patched. These models are trained on massive amounts of text from the internet, and what they learn is patterns: how to predict the next word in a sequence. When they don’t have the facts, they still produce a coherent-sounding sentence by filling the gaps with plausible-sounding but completely made-up information. They don’t ‘know’ whether something is true; they just know it sounds right.
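To make the "predict the next word" idea concrete, here’s a tiny toy sketch in Python. To be clear, this is not how ChatGPT actually works (real models are large neural networks, not word-pair counters), and the corpus and the function names `train_bigrams` and `generate` are made up for illustration. It just shows how picking the most likely next word can produce a fluent sentence that is false.

```python
from collections import Counter, defaultdict


def train_bigrams(corpus):
    """Count which word follows which across the training sentences."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def generate(follows, start, max_words=8):
    """Greedily append the most frequent next word.

    Nothing here checks whether the result is true; the only
    signal is how often one word followed another in training.
    """
    words = [start]
    while len(words) < max_words and words[-1] in follows:
        next_word = follows[words[-1]].most_common(1)[0][0]
        words.append(next_word)
    return " ".join(words)


# Hypothetical training text: every sentence in it is true.
corpus = [
    "paris is the capital of france",
    "rome is the capital of italy",
    "berlin is the capital of germany",
    "berlin is the capital of germany",  # repeated, so "germany" is the most common word after "of"
]

model = train_bigrams(corpus)
sentence = generate(model, "paris")
print(sentence)  # paris is the capital of germany
```

Every sentence in the training text is true, but the output isn’t. The toy model never stored "Paris goes with France"; it only learned that "germany" is the most common word after "of", so that’s what it says, with total confidence.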

So, why is this a catastrophic failure? Because trust is everything. If we can’t trust the information AI gives us, then what’s the point? It could lead to misinformation spreading faster than ever, people making bad decisions based on AI ‘facts,’ and a general erosion of confidence in technology.

Imagine using AI for medical advice or financial planning and it just makes things up. The consequences could be severe. It undermines the very promise of AI as a helpful, reliable tool. It’s like building a super-fast car that’s programmed to occasionally drive itself into a ditch.

This is why, as someone diving deep into AI research for my PhD, I’m genuinely concerned. We need to figure out how to make AI not just smarter, but more honest. We need ways to verify information, to flag uncertainty, and to make these models understand the difference between real data and plausible fiction.

It’s easy to be wowed by AI, but we also need to be critical. We can’t just accept every output as gospel. We need to push for better, more reliable AI systems. What are your thoughts? Have you had any wild AI